Unleashing Teacher Leadership

This field-tested toolkit from the National Institute for Excellence in Teaching gives teacher leaders the practical guidance they need to unlock their own power and drive lasting instructional improvement.

Teach for Authentic Engagement

$32.95

Premium members receive 9 new books a year.

Member Books

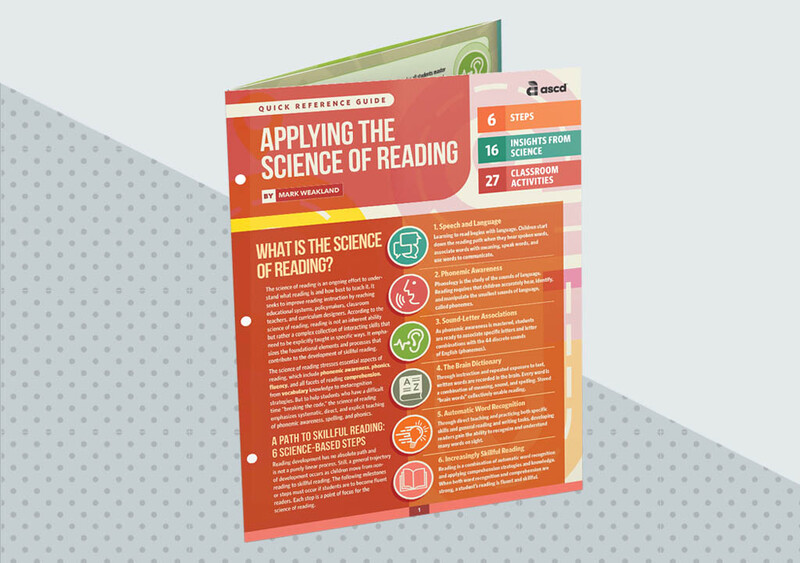

ASCD Leadership Summaries for Educators

Build upon your skills as an educator and leader in a format that fits into your busy day.